Hardening the ElastiCache Benchmark: Observable Lifecycle & Durable S3 Exports

AWS ElastiCache Lab (built on Amazon ElastiCache) is a repeatable performance harness for comparing cache configurations under controlled load. Each run is time-boxed, produces exportable artifacts, and tears down deterministically to keep both cost and comparability under control.

As the harness scales up (more Amazon ECS tasks, higher memory fill rate), the bottleneck often moves away from ElastiCache itself and toward the lifecycle boundary: shutdown, exports, and verification. The engine can change (Redis today, Valkey next); the boundary and evidence pipeline should not.

Two earlier posts frame the problem: a shutdown semantics bug that caused silent overrun (Shutdown Didn't Happen: Placeholder Semantics Bug), and the calibration work needed to reliably drive the cache to the boundary state (Designing for the Cliff: Calibrating Load to Reach maxmemory in a 1-Hour Run). This follow-up hardens the lifecycle boundary by making it observable and making its artifacts durable. If teardown or exports are unreliable, the benchmark output isn't defensible.

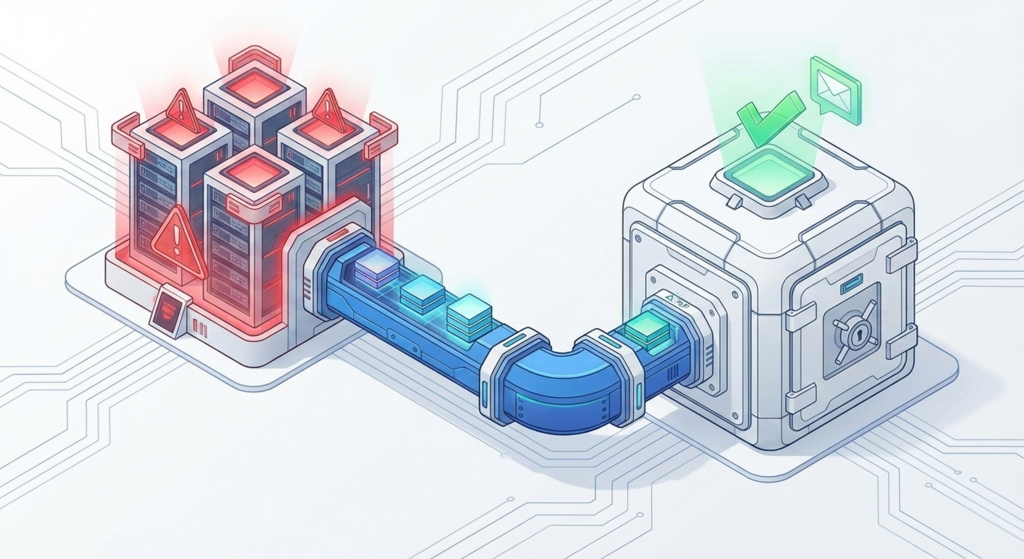

Two changes carry most of the load: optional SES-backed run receipts and a hardened export path (timeouts plus multipart uploads for large log streams). Notifications make the boundary observable; multipart exports make the evidence durable.

The goal is to close the loop: run -> artifacts -> verification, with receipts emitted at each boundary. This keeps each run measurable, auditable, and cost-bounded.

A run is considered complete only when:

- ECS service scaled to 0

- ElastiCache deletion initiated

- Metrics and logs exported to S3

- Post-boundary verification confirms nothing is still running
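The completion rule above is a conjunction: if any one condition is missing, the run is not complete. A minimal sketch of that check, assuming a hypothetical `BoundaryState` record filled in by the shutdown and verification steps (the field and function names are illustrative, not taken from the lab's code):

```python
from dataclasses import dataclass, fields

# Hypothetical record of what the shutdown and verification steps observed.
# Field names are illustrative; they do not come from the lab's code.
@dataclass(frozen=True)
class BoundaryState:
    ecs_scaled_to_zero: bool
    elasticache_deletion_initiated: bool
    artifacts_exported: bool
    verification_passed: bool

def missing_conditions(state: BoundaryState) -> list[str]:
    """Return the names of completion conditions that are not yet satisfied."""
    return [f.name for f in fields(state) if not getattr(state, f.name)]

def is_run_complete(state: BoundaryState) -> bool:
    # A run counts as complete only when every condition holds.
    return not missing_conditions(state)
```

Returning the list of missing conditions, rather than a bare boolean, lets a receipt say *which* boundary step failed, for example a run that exported artifacts but has not yet passed verification.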

Implementation (code + Terraform) lives in pull request #5: SES email notifications + multipart S3 log exports.

Email notifications (optional, Amazon SES-backed)

These emails are run receipts, not incident alerts: started, scheduled, complete, verified. They make the lifecycle boundary visible to both humans and automation.

In a production environment, the same boundaries would typically publish to Amazon SNS and route to ChatOps (Slack) or incident management (PagerDuty). SES is used here as a portable default and a simple audit trail for an ephemeral benchmark lab.

Because the boundary is driven by EventBridge schedules, the notification sink stays interchangeable: SES for portability here, SNS/webhooks in a production pipeline.
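One way to keep the sink interchangeable is to have the boundary code depend only on a small "send a receipt" interface. A minimal sketch, assuming duck-typed clients that behave like boto3's `ses` and `sns` clients (`send_email` and `publish` are real boto3 methods; `NotificationSink`, `SesSink`, `SnsSink`, `emit_receipt`, and the subject prefix are hypothetical names for illustration):

```python
from typing import Protocol

class NotificationSink(Protocol):
    """Anything that can deliver a short run receipt."""
    def send(self, subject: str, body: str) -> None: ...

class SesSink:
    """Deliver receipts with SES SendEmail via a boto3 'ses' client (or a stand-in)."""
    def __init__(self, client, sender: str, recipient: str) -> None:
        self.client, self.sender, self.recipient = client, sender, recipient

    def send(self, subject: str, body: str) -> None:
        self.client.send_email(
            Source=self.sender,
            Destination={"ToAddresses": [self.recipient]},
            Message={"Subject": {"Data": subject}, "Body": {"Text": {"Data": body}}},
        )

class SnsSink:
    """Deliver the same receipts with SNS Publish via a boto3 'sns' client."""
    def __init__(self, client, topic_arn: str) -> None:
        self.client, self.topic_arn = client, topic_arn

    def send(self, subject: str, body: str) -> None:
        self.client.publish(TopicArn=self.topic_arn, Subject=subject, Message=body)

def emit_receipt(sink: NotificationSink, event: str, run_id: str) -> None:
    # Boundary code depends only on `send`; swapping SES for SNS needs no changes here.
    sink.send(f"[elasticache-lab] {event}", f"run_id={run_id}\nevent={event}")
```

In this shape, moving from SES to SNS (and from there to Slack or PagerDuty subscriptions) changes which sink is constructed, not the shutdown or verification logic.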

When enabled, the lab sends:

- Test Started: sent by the scheduler Lambda as soon as it schedules the shutdown/verify timers (Amazon EventBridge scheduled rule).

- Test Complete: sent by the shutdown Lambda after it scales ECS to 0, starts ElastiCache deletion, and exports metrics/logs to S3.

- Verification Warning / OK: sent by the verification Lambda 15 minutes after the scheduled shutdown time to confirm whether ECS and ElastiCache are still running.
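The scheduler's job reduces to timestamp arithmetic: shutdown at start plus run length, verification 15 minutes later. A minimal sketch of building one-shot cron expressions for EventBridge scheduled rules, assuming UTC and a one-hour run as the example (the function names and the round-up policy are hypothetical; only the 15-minute delay comes from this post). The resulting string would be passed as `ScheduleExpression` to EventBridge `PutRule`:

```python
from datetime import datetime, timedelta, timezone

# The 15-minute verify delay comes from the post; everything else is illustrative.
VERIFY_DELAY = timedelta(minutes=15)

def one_shot_cron(when: datetime) -> str:
    """Build an EventBridge rule cron expression that matches a single UTC minute.

    `when` must be timezone-aware. Partial minutes are rounded up so the rule
    never fires before the intended boundary.
    """
    if when.tzinfo is None:
        raise ValueError("use a timezone-aware datetime")
    t = when.astimezone(timezone.utc)
    if t.second or t.microsecond:
        t = t.replace(second=0, microsecond=0) + timedelta(minutes=1)
    # Field order: minutes hours day-of-month month day-of-week year.
    # '?' is required in day-of-week when day-of-month is specified.
    return f"cron({t.minute} {t.hour} {t.day} {t.month} ? {t.year})"

def boundary_schedules(start: datetime, run_length: timedelta) -> dict[str, str]:
    """Return cron expressions for the shutdown and verification rules."""
    shutdown_at = start + run_length
    return {
        "shutdown": one_shot_cron(shutdown_at),
        "verify": one_shot_cron(shutdown_at + VERIFY_DELAY),
    }
```

For a run starting at 13:30 UTC on 5 June 2025 with a one-hour run length, this yields `cron(30 14 5 6 ? 2025)` for shutdown and `cron(45 14 5 6 ? 2025)` for verification. Pinning the year makes each rule match exactly once.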

Warnings mean the environment is still running past the boundary, which is both a cost risk and a benchmark integrity risk.
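The verifier's decision can be reduced to a pure function over what it observes. A minimal sketch, assuming the inputs come from ECS `DescribeServices` (`desiredCount`, `runningCount`) and the cache's status string; the function name, the status set, and the choice to treat an in-progress delete as acceptable are assumptions, not the lab's confirmed rule:

```python
from typing import Optional

# Cache states treated as "deletion initiated" (an assumption, not the lab's rule).
DELETION_STATES = {"deleting", "deleted"}

def verification_verdict(ecs_desired: int, ecs_running: int,
                         cache_status: Optional[str]) -> tuple[str, list[str]]:
    """Classify the post-boundary state as ("OK" | "WARNING", reasons).

    ecs_desired / ecs_running mirror desiredCount / runningCount from ECS
    DescribeServices; cache_status is the cache's status string, or None if
    the cache no longer exists.
    """
    reasons: list[str] = []
    if ecs_desired > 0 or ecs_running > 0:
        reasons.append(f"ECS still running (desired={ecs_desired}, running={ecs_running})")
    if cache_status is not None and cache_status not in DELETION_STATES:
        reasons.append(f"ElastiCache status is {cache_status!r}")
    return ("WARNING" if reasons else "OK"), reasons
```

Keeping the verdict separate from the AWS calls makes the warning logic testable without a live environment, and the reasons list can go straight into the Verification Warning email body.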

Enablement is deliberately explicit: notifications stay disabled unless both of the following variables are set (for example, in terraform.tfvars):

- notification_email: recipient email address

- notification_ses_identity_arn: verified SES identity ARN (required when notification_email is set)

Implementation detail: Terraform passes NOTIFICATION_EMAIL and SES_IDENTITY_ARN into the three Lambdas. The SES identity ARN provides both the region and the sender identity (domain identity -> aws-elasticache-lab@{domain}; email identity -> that address). Emails are sent via SES SendEmail.
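The ARN-to-sender mapping is mechanical, since an SES identity ARN has the shape `arn:aws:ses:<region>:<account>:identity/<domain-or-email>`. A minimal sketch, assuming the two values arrive as Lambda environment variables; `sender_from_identity_arn` and `notification_config` are hypothetical helpers, and the "disabled unless both are set" gate mirrors the Terraform behavior rather than reproducing the lab's code:

```python
import os
from typing import Mapping, Optional

def sender_from_identity_arn(arn: str) -> tuple[str, str]:
    """Return (region, sender address) derived from an SES identity ARN.

    Expected shape: arn:aws:ses:<region>:<account>:identity/<domain-or-email>
    """
    parts = arn.split(":", 5)
    if len(parts) != 6 or parts[2] != "ses" or not parts[5].startswith("identity/"):
        raise ValueError(f"not an SES identity ARN: {arn}")
    region = parts[3]
    identity = parts[5][len("identity/"):]
    # Email identity: send from that address. Domain identity: fixed local part.
    sender = identity if "@" in identity else f"aws-elasticache-lab@{identity}"
    return region, sender

def notification_config(env: Mapping[str, str] = os.environ) -> Optional[dict]:
    """Return None (notifications disabled) unless both variables are set."""
    email = env.get("NOTIFICATION_EMAIL", "").strip()
    arn = env.get("SES_IDENTITY_ARN", "").strip()
    if not (email and arn):
        return None
    region, sender = sender_from_identity_arn(arn)
    return {"region": region, "sender": sender, "recipient": email}
```

With a config in hand, a Lambda would create the client in the identity's region, for example `boto3.client("ses", region_name=cfg["region"])`, and call `send_email` with `Source=cfg["sender"]`.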

Durable exports under higher load

As load increases, exports stop being an afterthought. The run has value only if the artifacts are captured and reviewable; otherwise it's anecdote. This is the same theme as Beyond Documentation: Building a Data-Driven Test Lab for ElastiCache: evidence beats assumptions.

In a production logging pipeline, CloudWatch Logs would typically stream via subscription filters to Kinesis Data Firehose (landing in S3), or be exported asynchronously via export tasks. This lab is intentionally ephemeral and run-scoped, so it performs a shutdown-time "sweep" to produce one consolidated log artifact per run and avoid keeping streaming infrastructure running between tests.

- Timeout budget: the shutdown Lambda has a 300-second timeout. Under heavier runs, the CloudWatch Logs export can be slow; the extra budget reduces the chance of losing artifacts to a Lambda function timeout. The scheduler and verifier keep short timeouts (60 seconds) because they only perform control-plane calls.

- Sweep + multipart log exports: logs are written to Amazon S3 as plain text. Instead of relying on a single PutObject (risks: memory spikes, timeouts, or truncated exports), the shutdown Lambda performs a bounded sweep via FilterLogEvents and streams the result into an S3 multipart upload: each time the in-memory buffer reaches 6 MiB (above S3's 5 MiB minimum part size), it uploads a part, and it completes the upload when the sweep ends.
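The buffering side of the multipart export can be sketched as a small writer that flushes a part whenever the buffer crosses 6 MiB. This assumes a client that behaves like a boto3 S3 client (`create_multipart_upload`, `upload_part`, `complete_multipart_upload`, `abort_multipart_upload`, and `put_object` are real boto3 methods); `MultipartLogWriter` and its empty-export fallback are hypothetical, not the lab's implementation:

```python
PART_SIZE = 6 * 1024 * 1024  # flush threshold from the post; S3's minimum part size is 5 MiB

class MultipartLogWriter:
    """Buffer text lines and stream them to S3 as a multipart upload.

    `client` is expected to behave like a boto3 S3 client.
    """
    def __init__(self, client, bucket: str, key: str, part_size: int = PART_SIZE):
        self.client, self.bucket, self.key, self.part_size = client, bucket, key, part_size
        self.buffer = bytearray()
        self.parts: list[dict] = []
        resp = client.create_multipart_upload(Bucket=bucket, Key=key, ContentType="text/plain")
        self.upload_id = resp["UploadId"]

    def write_line(self, line: str) -> None:
        self.buffer += (line.rstrip("\n") + "\n").encode("utf-8")
        # Memory stays bounded at roughly one part plus one line.
        if len(self.buffer) >= self.part_size:
            self._flush()

    def _flush(self) -> None:
        part_number = len(self.parts) + 1
        resp = self.client.upload_part(Bucket=self.bucket, Key=self.key,
                                       UploadId=self.upload_id, PartNumber=part_number,
                                       Body=bytes(self.buffer))
        self.parts.append({"ETag": resp["ETag"], "PartNumber": part_number})
        self.buffer.clear()

    def close(self) -> None:
        if not self.parts and not self.buffer:
            # Nothing was written: drop the multipart upload and store an empty object.
            self.abort()
            self.client.put_object(Bucket=self.bucket, Key=self.key, Body=b"",
                                   ContentType="text/plain")
            return
        # Only the final part may be smaller than the 5 MiB minimum.
        if self.buffer:
            self._flush()
        self.client.complete_multipart_upload(Bucket=self.bucket, Key=self.key,
                                              UploadId=self.upload_id,
                                              MultipartUpload={"Parts": self.parts})

    def abort(self) -> None:
        # Abort on failure so incomplete parts do not linger and accrue storage charges.
        self.client.abort_multipart_upload(Bucket=self.bucket, Key=self.key,
                                           UploadId=self.upload_id)
```

A caller would wrap the sweep in `try`/`except`, calling `close()` on success and `abort()` on error, so a failed export leaves no orphaned parts behind.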

This keeps the evidence pipeline stable as load grows: more ECS tasks should translate into larger artifacts, not missing artifacts.
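The "bounded" part of the sweep pairs naturally with the 300-second timeout: page through FilterLogEvents, but stop while there is still time left to finish the upload. A minimal sketch, assuming a client that behaves like boto3's `logs` client (`filter_log_events` with `logGroupName`, `startTime`, `endTime`, and `nextToken` is the real API) and a `remaining_ms` callable such as the Lambda context's `get_remaining_time_in_millis`; the function name, the 30-second reserve, and the truncation marker line are hypothetical choices:

```python
from typing import Callable, Iterator, Optional

def sweep_log_group(logs_client, log_group: str, start_ms: int, end_ms: int,
                    remaining_ms: Callable[[], int],
                    reserve_ms: int = 30_000) -> Iterator[str]:
    """Yield formatted log lines from FilterLogEvents until done or out of time.

    remaining_ms would typically be context.get_remaining_time_in_millis in a Lambda.
    reserve_ms keeps time back to finish the S3 upload after the sweep stops.
    """
    token: Optional[str] = None
    while True:
        if remaining_ms() < reserve_ms:
            # Make truncation visible in the artifact instead of failing silently.
            yield "# sweep stopped early: Lambda time budget reserved for upload"
            return
        kwargs = {"logGroupName": log_group, "startTime": start_ms, "endTime": end_ms}
        if token:
            kwargs["nextToken"] = token
        resp = logs_client.filter_log_events(**kwargs)
        for event in resp.get("events", []):
            yield f'{event["timestamp"]} {event["logStreamName"]} {event["message"].rstrip()}'
        # A response without nextToken means pagination is finished.
        token = resp.get("nextToken")
        if not token:
            return
```

Because it is a generator, each line can be handed to a buffered multipart writer as it arrives, so the Lambda never holds the full log group in memory.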

Why this matters

Benchmarks that can't be reproduced, audited, and cost-bounded shouldn't drive architecture decisions. If the harness can reach the boundary state but can't reliably stop and export, the numbers aren't defensible, and the cost exposure is uncapped. In cloud architecture, a process that cannot guarantee its own termination is a liability.